Supercharging Performance with Partitioning in Databricks and Spark (Part 1/3)

Why Every Data Engineer Needs to Understand Partitioning!

This is the first article out of three covering one of the most important features of Spark and Databricks: Partitioning.

This first chapter will focus on the general theory of partitioning and partitioning in Spark.

Part 2 will go into the specifics of table partitioning and we will prepare our dataset.

Part 3 will cover an in-depth case study and carry out performance comparisons.

Introduction

When it comes to data, the concept of “big data” is often defined by the 3 V’s (or sometimes more, depending on the source): Volume, Variety, and Velocity.

Volume pertains to the sheer size of the dataset, which can go up to terabytes and beyond

Variety refers to the challenge of handling diverse data types and sources, which must be effectively aggregated

Velocity, on the other hand, concerns the speed at which data is received; even 100mb files can quickly accumulate into a vast amount of data if they are received every second

To develop high-performing solutions for handling “big data”, there are various techniques and patterns at our disposal. One such technique is Partitioning. When dealing with large datasets, the processing power of individual machines can become limiting, necessitating the use of distributed and parallel processing capabilities provided by platforms such as Databricks and tools like Spark.

Partitioning is the key to making this possible. It involves dividing a large dataset into smaller, more manageable pieces known as partitions.

In distributed data processing systems like Databricks, partitions are employed to distribute data across multiple nodes, enabling parallel processing and heightened performance.

By breaking data into partitions, each one can be processed independently, leading to quicker processing times and greater scalability.

Partitioning can also help balance the workload across nodes, reduce data movement, and minimize the impact of any skew or imbalance in the data.

On the other hand, inefficient partitioning can lead to performance bottlenecks, wasted resources, and longer processing times. Therefore, choosing the right partitioning strategy is crucial to optimizing performance in distributed data processing systems like Databricks.

Understanding Partitioning in Databricks and Spark

First, we need to differentiate between partitioning on a DataFrame / RDD level and partitioning on table level.

RDDs and DataFrames

The compute engine of Databricks is Spark. When we use SQL, Scala, R or Python to code in Databricks, we merely use their respective API’s to access the underlying Spark engine.

Spark uses RDDs (Resilient Distributed Datasets) as its fundamental data structure. As their name suggests, RDDs are distributed datasets. Nowadays, it is not as popular to work with RDDs directly but rather with the DataFrame API, which provides DataFrame objects as higher-level abstractions of RDDs and allows us to name columns. Therefore, DataFrames are similar to tables in a traditional relational database. Moreover, DataFrames offer many performance optimization options.

When we load our data into such a DataFrame, it is already partitioned. We can see this in the screenshot down below. If I access the underlying RDD of the DataFrame, we can get the number of partitions by using .rdd.getNumPartitions().

When working with RDDs or DataFrames, Spark automatically handles the partitioning process, even if we didn’t explicitly specify it. It is important to mention that when importing data from an already partitioned table, the existing partitioning structure is taken into consideration.

In the screenshot above I am loading data from an unpartitioned table, meaning that Spark will handle the partitioning based on several configurations we will cover below.

We also need to distinguish between partitions and files because they are not the same thing: Partitions refer to the logical divisions of data in a distributed computing environment. Files, on the other hand, are the actual storage units of data on disk.

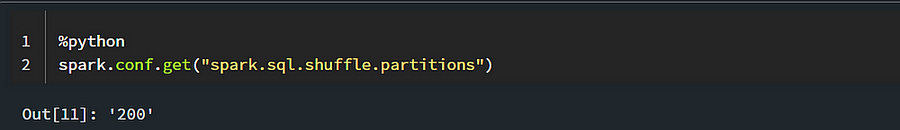

When performing certain operations that require shuffling the data, Spark splits the data into partitions based on the “spark.sql.shuffle.partitions” configuration.

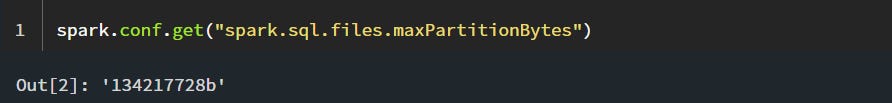

However, this same property also controls the number of partitions when simply reading the data, as shown in the first screenshot. By default, this is set to 200, which means Spark will attempt to create 200 partitions. Nonetheless, the “spark.sql.files.maxPartitionBytes” configuration also imposes a limit on this process.

The actual storage size of each partition depends on various factors, such as available memory and the size of the dataset. However, Databricks creates partitions with a maximum size defined by the “spark.sql.files.maxPartitionBytes” configuration property. By default, this value is set to 128MB.

Another way to control the size of each DataFrame partition, is to repartition a DataFrame using the .repartition() or .coalesce() methods and specify the number of partitions / or the columns which we want to partition by.

repartition() is used to increase or decrease the number of partitions of a DataFrame or RDD. When we use .repartition(), Spark shuffles the data and creates new partitions based on the specified number of partitions

coalesce() is used to decrease the number of partitions of a DataFrame or RDD. When we use .coalesce(), Spark tries to combine existing partitions to create new partitions. Unlike repartition(), coalesce() does not shuffle the data.

Summary

Partitioning is an essential technique for building performant “big data” solutions and choosing the right partitioning strategy is crucial to achieving good performance and scalability. However, if we don’t have the resources to investigate the best partitioning setup, we are better off using Databricks’s native optimization settings and options.

There isn’t a universal solution for every table, as priorities and objectives need to be balanced. Each scenario requires a tailored approach to address its requirements and challenges.

Partitioning on a DataFrame / RDD level is concerned with distributing data across cluster nodes for parallel processing, while partitioning on table level focuses on organizing data within storage systems to optimize query performance.

Now that we have understood the importance of Partitioning and how RDDs and DataFrames are partitioned, in the next article we will cover how Tables in Databricks are partitioned and we will prepare the data for our final chapter.

Thank you for reading!