Azure Databricks in the Enterprise Context: Networking

A Comprehensive Overview of Network Security and Compliance with Databricks

Preface: Databricks in the Enterprise Context

Databricks can be an amazing platform for developing solutions for data engineering, data science, and data analytics. It abstracts many of the cumbersome infrastructure tasks required to manage a self-hosted Spark environment and offers numerous additional capabilities beyond an execution environment for Python, Scala, R, or SQL code.

For personal projects, it is easy to create a Databricks instance, set up a cluster, and get straight to development.

However, in an enterprise context, there are many additional topics that are critical for gaining permission to use Databricks and then using it effectively:

Networking

Governance

Cost Management

Monitoring

DevOps and IaC

Data Quality

and so many more…

Since first working with Azure and Databricks in 2019, I have greatly enjoyed building solutions on both platforms and advising others on how to do the same.

In this series, I will present my own learnings, summarise the documentation in a structured way and identify critical points companies need to take into consideration.

Introduction

The first topic we are exploring in this series is networking, as it’s a critical aspect of security and compliance. For example, even if user credentials get compromised, IP access lists, firewalls, and private connectivity can still prevent unauthorised access to sensitive data.

Apart from that, some corporations have strict guidelines to prevent any traffic over the public internet and must ensure that all traffic goes through the backbone network of their cloud provider.

At the same time, if you are working in an established environment, these topics can help you better understand what might be configured behind the scenes and potentially causing issues.

Databricks Managed Resource Group

When we create a Databricks resource in Azure, it automatically provisions a managed resource group where the virtual machines for the clusters will be created.

The root storage of the Databricks File System (DBFS), and with it the managed tables of the legacy Hive metastore, also lives in the storage account within this resource group.

We can choose the name of the managed resource group during the workspace creation as seen in the image below.

The setup varies depending on whether we:

… create a private (secure cluster connectivity enabled — no public IP) or public workspace

… deploy Databricks in our own dedicated Virtual Network (VNet) or let it create its own virtual network (the default option).

We will cover what exactly these options mean further below, but seeing the resulting resources first can help you understand the details.

Public Databricks workspace in managed VNet

If we choose a public instance, the managed resource group will include a managed identity, a storage account, virtual networks for the workers, and a network security group (NSG).

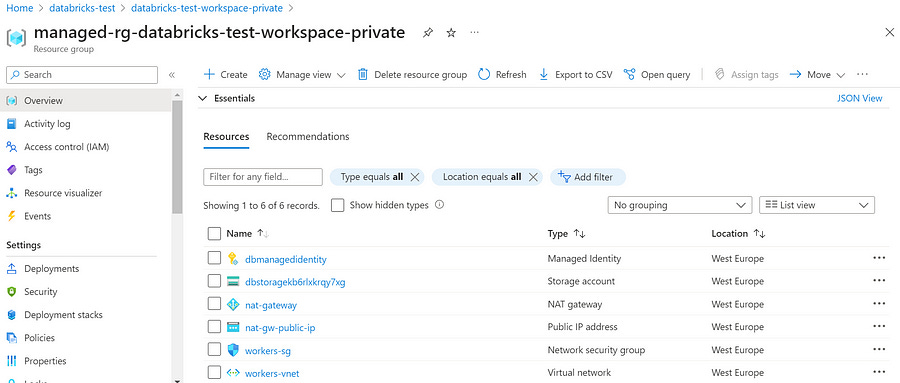

Private Databricks workspace in managed VNet

If we opt for a private instance and let Databricks create its own virtual network, the managed resource group will also contain a NAT gateway and a public IP address, which are necessary to provide outbound connectivity to the internet.

This setup allows for secure communication with external services while maintaining the isolation of the internal network.

Public Databricks workspace in self-managed VNet (VNet injection)

For a public workspace in our own virtual network, only a managed identity and a storage account will be included. The workers run in the VNet we have deployed, so no new VNet or NSG will be created.

In this case, the unity-catalog-access-connector is also deployed because I chose a premium workspace instead of a standard one.

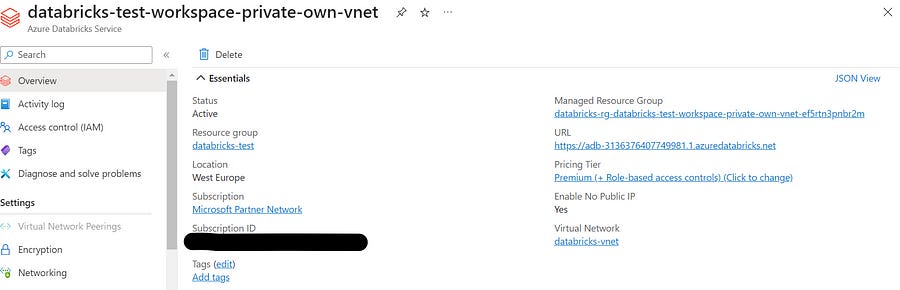

Private Databricks workspace in self-managed VNet (VNet injection)

If we choose to create a private instance in our own virtual network, Microsoft still recommends setting up a stable outbound IP address.

When we enable secure cluster connectivity, clusters have no public IP addresses and the workspace subnets are private subnets. Having a stable IP address then enables us to add it to allow lists of external services.

Moreover, Microsoft has announced that it will retire default outbound access for virtual machines in 2025, so it is highly recommended to set up a stable outbound IP address (for example, with an Azure NAT gateway) for workspaces with secure cluster connectivity enabled.

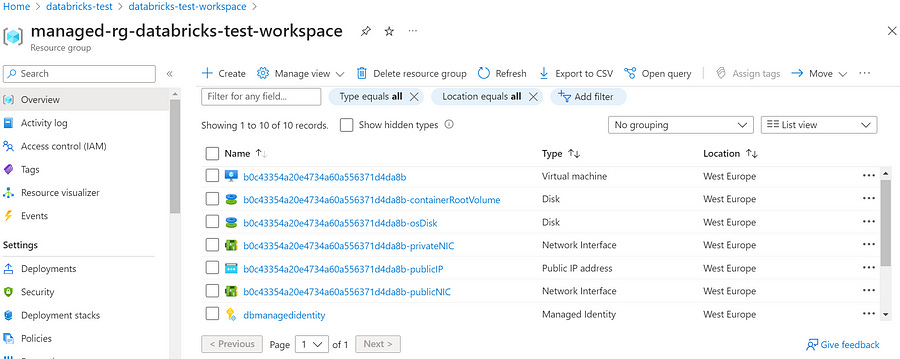

Regardless of the setup, once we create a cluster in our workspace, the virtual machines, disks, and network interfaces associated with it appear inside the managed resource group.

The Databricks Architecture

By default, Databricks already provides a secure networking environment, but organisations might have detailed requirements for:

Understanding how Databricks works to ensure it is in line with their guidelines.

Configuring and/or modifying certain aspects to ensure compliance.

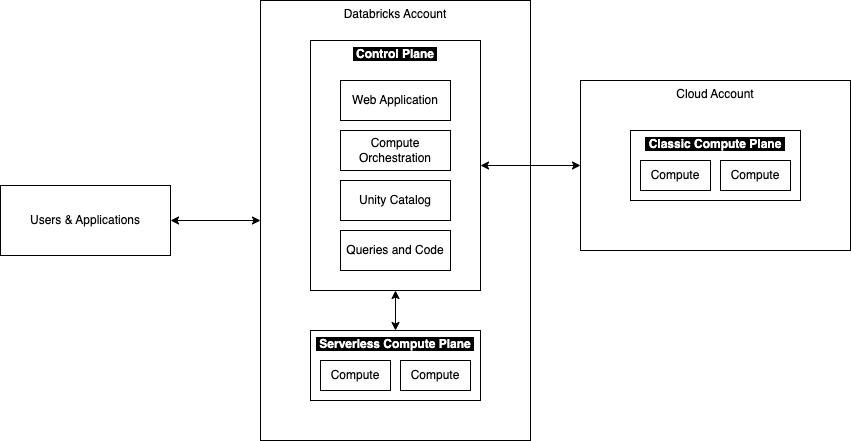

Databricks has two main components:

The Control Plane:

The control plane contains the backend services that Databricks manages: the web application, stored code such as notebooks, Unity Catalog, and the orchestration of compute resources.

The Compute Plane:

Classic Compute Plane: Clusters are deployed inside the managed resource group as Azure resources and connect to the control plane.

Serverless Compute Plane: Serverless workloads run in a compute plane that Databricks manages within your Azure Databricks account, so they are not created as Azure resources in your own subscription.

When evaluating network setups, we are concerned with four main questions:

How users and applications connect to Databricks.

How classic compute resources such as clusters connect to the control plane and other Azure resources.

How serverless compute resources connect to the control plane.

How we can connect Databricks to on-premises resources or other cloud providers.

1. Users and Applications to Databricks

Apart from authentication and access control, we can secure our Databricks workspaces in three additional ways:

Private Connectivity (Requires Premium Plan)

Using Azure Private Link, we can ensure that traffic from other Azure VNets and on-premises networks runs through the Microsoft backbone network.

For Azure Databricks, Azure Private Link lets users and other applications reach the workspace through Azure private endpoints, which are network interfaces in a VNet that provide private access to the Databricks control plane.

There are three Private Link connection types:

Front-end Private Link (User to Workspace): This enables users to connect to the web application, REST API and Databricks Connect API via private endpoints.

Back-end Private Link (Compute Plane to Control Plane): This enables clusters in self-managed VNets to access the secure cluster connectivity relay endpoint through a private connection.

Browser Authentication Private Endpoint: This is required for front-end private connectivity from clients that have no public internet access, and it is also recommended for clients that do have internet access.

It is also possible to block all access to Azure Databricks from the public internet, but only if both front-end and back-end Private Link are implemented.

For all connection types, several requirements must be satisfied:

Premium or Enterprise Databricks workspace

The workspace must use VNet injection (self-managed VNet) and satisfy all the requirements for that

In addition to the VNet injection requirements, implementing front-end and back-end Private Link requires a third subnet in the VNet for the Private Link endpoint

If an NSG or firewall controls traffic from the VNet, ports 443, 6666, 3306, and 8443–8451 must be open for egress

If NSG rules are in place, the same ports must allow ingress on the subnet where the private endpoint is located
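To make the port requirement concrete, here is a minimal, hypothetical pre-deployment check. It assumes the allowed egress ports are available as NSG-style strings such as "443", "8443-8451" or "*"; the function names are illustrative, and the check ignores direction, protocol and destination, which a real rule review must also cover.

```python
# Hypothetical helper: checks whether a set of allowed egress port specs
# covers the ports listed above for Private Link deployments.
REQUIRED_EGRESS_PORTS = {443, 6666, 3306} | set(range(8443, 8452))  # 8443-8451 inclusive


def expand_port_specs(specs):
    """Expand NSG-style port specs ("443", "8443-8451", "*") into a set of ints."""
    ports = set()
    for spec in specs:
        spec = spec.strip()
        if spec == "*":
            return set(range(0, 65536))  # "*" means any port
        if "-" in spec:
            low, high = (int(part) for part in spec.split("-", 1))
            ports.update(range(low, high + 1))
        else:
            ports.add(int(spec))
    return ports


def missing_egress_ports(allowed_specs):
    """Return the required ports that the given specs do not allow, sorted."""
    return sorted(REQUIRED_EGRESS_PORTS - expand_port_specs(allowed_specs))


if __name__ == "__main__":
    print(missing_egress_ports(["443", "3306", "8443-8450"]))  # [6666, 8451]
```

A check like this is easiest to run in a CI pipeline against the rule definitions in your IaC templates, before anything is deployed.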

Showing how to implement private connectivity is beyond the scope of this article, and the details also depend on whether a standard or simplified deployment is chosen. However, the documentation offers a detailed guide on how to do it.

IP Access Lists (Requires Premium Plan)

The second mechanism we can use to prevent unauthorised access is IP access lists, which restrict access to requests coming from known, trusted networks.

We can create access lists both for the account console and for workspaces, although the available tools differ:

Access lists for the account console: Databricks accounts are managed through both the Azure portal and the Databricks account console, each serving different purposes (Databricks Account Management). IP access lists for the account console can be added in the Settings section of the account console.

Access lists for workspaces: Access lists for workspaces can only be created and managed via the Databricks CLI or the IP Access Lists API as shown here.
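The REST calls behind the workspace-level workflow can be sketched with Python's standard library. This is a minimal sketch, assuming the endpoints `PATCH /api/2.0/workspace-conf` (with the `enableIpAccessLists` setting) and `POST /api/2.0/ip-access-lists`; the workspace URL, token and label are placeholders. The functions only build the requests so they can be inspected before being sent with `urllib.request.urlopen`.

```python
import json
import urllib.request


def _json_request(url, token, body, method):
    # Bearer token, e.g. a personal access token (placeholder in this sketch).
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method=method,
    )


def build_enable_ip_access_lists(workspace_url, token):
    """Request that switches on the IP access list feature for a workspace."""
    url = f"{workspace_url.rstrip('/')}/api/2.0/workspace-conf"
    return _json_request(url, token, {"enableIpAccessLists": "true"}, "PATCH")


def build_create_ip_access_list(workspace_url, token, label, list_type, ip_addresses):
    """Request that creates an ALLOW or BLOCK list of IP addresses/CIDR ranges."""
    if list_type not in ("ALLOW", "BLOCK"):
        raise ValueError("list_type must be 'ALLOW' or 'BLOCK'")
    url = f"{workspace_url.rstrip('/')}/api/2.0/ip-access-lists"
    body = {"label": label, "list_type": list_type, "ip_addresses": list(ip_addresses)}
    return _json_request(url, token, body, "POST")


# Placeholder values; not a real workspace or token.
req = build_create_ip_access_list(
    "https://adb-1234567890123456.7.azuredatabricks.net",
    "<token>",
    "corporate-network",
    "ALLOW",
    ["203.0.113.0/24"],
)
print(req.get_method(), req.full_url)
```

Before enabling the feature, make sure the address you are calling from is on an allow list, otherwise you can lock yourself out of the workspace.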

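It is possible to create both allow lists and block lists. Block lists are checked first; if the connection is not blocked, the client IP address is then compared against the allow lists. If at least one allow list exists, only addresses that match an allow list are accepted; if there are none, every address that is not blocked is accepted.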
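The evaluation order can be sketched as follows. This is a simplified model of the behaviour described above, not Databricks' implementation; the lists are represented as hypothetical Python lists of IP addresses or CIDR ranges.

```python
import ipaddress


def _matches(ip, entries):
    """True if the IP falls inside any address or CIDR range in entries."""
    return any(ip in ipaddress.ip_network(entry, strict=False) for entry in entries)


def is_connection_allowed(client_ip, block_lists, allow_lists):
    ip = ipaddress.ip_address(client_ip)
    # Step 1: block lists are evaluated first; any match rejects the connection.
    if any(_matches(ip, entries) for entries in block_lists):
        return False
    # Step 2: with no allow lists configured, anything not blocked is accepted.
    if not allow_lists:
        return True
    # Otherwise the address must match at least one allow list.
    return any(_matches(ip, entries) for entries in allow_lists)


allow_lists = [["203.0.113.0/24"]]   # e.g. corporate egress range (placeholder)
block_lists = [["203.0.113.66/32"]]  # e.g. a compromised host inside that range
print(is_connection_allowed("203.0.113.10", block_lists, allow_lists))  # True
print(is_connection_allowed("203.0.113.66", block_lists, allow_lists))  # False
print(is_connection_allowed("198.51.100.7", block_lists, allow_lists))  # False
```

Because block lists win, a single address can be carved out of an otherwise allowed range without rewriting the allow list.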

Firewall rules

If your company uses firewalls to block traffic to specific domains, there are two options for enabling access to the resources:

Allowing HTTPS and WebSocket traffic to “*.azuredatabricks.net”

Allowing traffic only to specific Databricks workspaces. For this, we need to identify each workspace's two domains and allow HTTPS and WebSocket traffic to them. The first is the workspace URL shown on the Databricks resource in the Azure portal, and the second is identical except that it uses the “adb-dp-” prefix instead of “adb-”.
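Deriving the two domains for the second option can be sketched as a small helper. The workspace URL below is a made-up placeholder in the usual adb-<workspace-id>.<n>.azuredatabricks.net form, and the helper simply applies the prefix rule described above; the function name is hypothetical.

```python
from urllib.parse import urlparse


def workspace_firewall_domains(workspace_url):
    """Return the workspace domain and its "adb-dp-" counterpart."""
    host = urlparse(workspace_url).hostname if "://" in workspace_url else workspace_url
    if not host or not host.startswith("adb-"):
        raise ValueError(f"unexpected workspace host: {workspace_url!r}")
    if host.startswith("adb-dp-"):
        raise ValueError("pass the workspace URL, not the adb-dp- domain")
    # Same host, with the "adb-" prefix swapped for "adb-dp-".
    return [host, "adb-dp-" + host[len("adb-"):]]


print(workspace_firewall_domains("https://adb-1234567890123456.7.azuredatabricks.net"))
# ['adb-1234567890123456.7.azuredatabricks.net', 'adb-dp-1234567890123456.7.azuredatabricks.net']
```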

2. Clusters to Control Plane and other Azure Resources

There are three features that can be used to configure the interaction between the control and compute plane for easier networking administration and enhanced security:

Secure Cluster Connectivity

Before covering this topic, it is important to mention that regardless of whether secure cluster connectivity is enabled, network traffic between the classic compute plane and the control plane traverses the Microsoft network backbone.

By enabling secure cluster connectivity, we can achieve two additional things:

The VNets of the clusters have no open ports

The compute resources have no public IP addresses

Each cluster initiates an outbound connection to the secure cluster connectivity relay in the control plane over HTTPS on port 443. This allows cluster administration traffic to flow securely without exposing any public endpoints on the compute plane.

Secure Cluster Connectivity can also be enabled for existing workspaces as described here.

VNet Injection

Using VNet injection for Azure Databricks means deploying the Databricks workspace into your own Azure virtual network (VNet) instead of the default managed VNet.

How best to do this is a topic of its own, and detailed documentation can be found here. What is important to know is that by deploying Databricks into your own VNet, you can achieve five things:

More secure connection to other Azure services by using Azure Private Endpoints

Ability to use user-defined routes to connect to on-premises resources

Ability to inspect and restrict / allow outbound traffic

Integration with custom DNS

Ability to use network security groups (NSGs) to configure outbound traffic restrictions
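Deploying into your own VNet also means planning its address space yourself. As a minimal sketch, assuming the two cluster subnets (commonly called the host or public subnet and the container or private subnet) plus the optional third subnet for Private Link mentioned earlier, the following checks that every subnet lies inside the VNet and that none overlap. The subnet names and ranges are hypothetical, and the sketch does not encode Databricks' own size or delegation requirements, which are listed in the documentation.

```python
import ipaddress
from itertools import combinations


def check_subnet_layout(vnet_cidr, subnets):
    """Return a list of problems; an empty list means the layout looks consistent."""
    vnet = ipaddress.ip_network(vnet_cidr)
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    problems = []
    # Every subnet must be carved out of the VNet's address space.
    for name, net in nets.items():
        if not net.subnet_of(vnet):
            problems.append(f"{name} ({net}) is outside the VNet {vnet}")
    # No two subnets may share addresses.
    for (name_a, net_a), (name_b, net_b) in combinations(nets.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{name_a} and {name_b} overlap")
    return problems


layout = {
    "host": "10.10.0.0/24",              # hypothetical host (public) subnet
    "container": "10.10.1.0/24",         # hypothetical container (private) subnet
    "private-endpoint": "10.10.2.0/27",  # hypothetical third subnet for Private Link
}
print(check_subnet_layout("10.10.0.0/16", layout))  # []
```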

Private Connectivity (Requires Premium Plan)

As described in the first section, the same mechanism that enables users to securely access Databricks workspaces can also be used to enable private connectivity between clusters on the classic compute plane and the control plane.

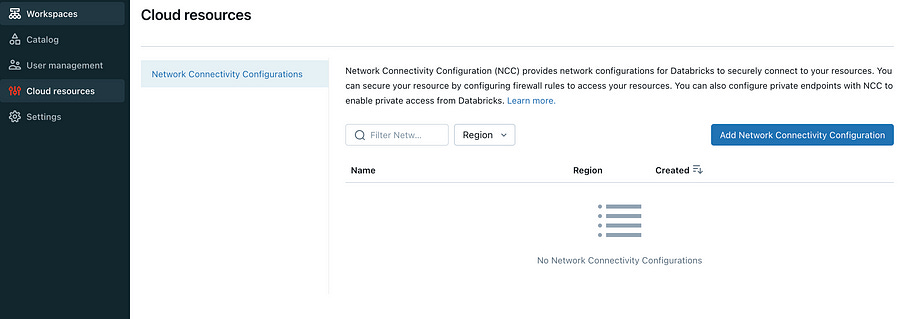

3. Serverless Compute to Control Plane and other Azure Resources

In contrast to resources in the classic compute plane, which reside in our own Azure subscription rather than in the Databricks account, the serverless compute plane is automatically managed by Databricks.

According to the documentation: “Connectivity between the control plane and the serverless compute plane is always over the cloud network backbone and not the public internet”.

Therefore, setting up serverless resources does not require any specific configuration to ensure secure traffic within Databricks.

However, if we want serverless compute, such as serverless SQL warehouses, to access other Azure resources securely, we can configure network connectivity configurations (NCCs) to create private endpoints for the serverless resources. How to enable serverless compute to access Azure resources that sit behind a firewall or private endpoint is described here.
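The NCC workflow can be sketched as two account-level API calls: create the NCC, then add a private endpoint rule pointing at an Azure resource. This is a hedged sketch, assuming the account API host `https://accounts.azuredatabricks.net` and the `network-connectivity-configs` and `private-endpoint-rules` endpoints with the field names shown; verify them against the current Account API reference. The account ID, token and resource ID are placeholders, and the functions only build the requests.

```python
import json
import urllib.request

# Assumed Azure Databricks account API host; verify against the API reference.
ACCOUNTS_HOST = "https://accounts.azuredatabricks.net"


def _post(url, token, body):
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def build_create_ncc(account_id, token, name, region):
    """Request creating an NCC; NCCs are regional, so region must match the workspaces."""
    url = f"{ACCOUNTS_HOST}/api/2.0/accounts/{account_id}/network-connectivity-configs"
    return _post(url, token, {"name": name, "region": region})


def build_private_endpoint_rule(account_id, token, ncc_id, resource_id, group_id):
    """Request adding a private endpoint rule, e.g. group_id "blob" or "dfs" for storage."""
    url = (f"{ACCOUNTS_HOST}/api/2.0/accounts/{account_id}"
           f"/network-connectivity-configs/{ncc_id}/private-endpoint-rules")
    return _post(url, token, {"resource_id": resource_id, "group_id": group_id})


# Placeholder values only.
req = build_create_ncc("<account-id>", "<token>", "ncc-westeurope", "westeurope")
print(req.get_method(), req.full_url)
```

After the rule is created, the resulting private endpoint still has to be approved on the target Azure resource, and the NCC has to be attached to the workspaces that should use it.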

4. Databricks Connection to On-Premises Resources

Apart from securing traffic within Azure and between Databricks and Azure resources, it is equally important to establish a secure connection between Azure Databricks and on-premises resources.

This process involves connecting on-premises networks to Azure VNets through either ExpressRoute or a Site-to-Site VPN connection.

To achieve this, traffic is routed through a transit VNet using a hub-and-spoke topology, ensuring secure data flow between the on-premises network and the Azure Databricks workspace.

The process of setting up the connection then involves several key steps:

1. Setting Up a Transit Virtual Network

A transit VNet equipped with an Azure Virtual Network Gateway can be configured using ExpressRoute or a VPN. If an existing gateway is available, it can be used to establish the connection. Otherwise, a new gateway must be set up.

2. Peering Virtual Networks

The next step involves peering the Azure Databricks VNet with the transit VNet. This peering allows the Databricks workspace to use the transit VNet’s gateway for routing traffic to the on-premises network.

Specific settings, such as “Use remote gateways” on the Databricks VNet's peering and “Allow gateway transit” on the transit VNet's peering, are essential for successful peering. If the standard settings do not work, additional options such as allowing forwarded traffic can be enabled.
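The peering settings come as a pair, one per direction. As a minimal sketch, the dictionaries below use the property names of Azure's virtual network peering resource as they appear in ARM or Bicep templates; the VNet IDs are placeholders and the helper names are hypothetical. The validation encodes one rule: a side that uses remote gateways only works if the other side allows gateway transit.

```python
def build_peering_pair(databricks_vnet_id, transit_vnet_id, allow_forwarded_traffic=False):
    """Return (databricks->transit, transit->databricks) peering property dicts."""
    databricks_side = {
        "remoteVirtualNetwork": {"id": transit_vnet_id},
        "allowVirtualNetworkAccess": True,
        "allowForwardedTraffic": allow_forwarded_traffic,
        "allowGatewayTransit": False,
        "useRemoteGateways": True,   # route on-premises traffic via the transit gateway
    }
    transit_side = {
        "remoteVirtualNetwork": {"id": databricks_vnet_id},
        "allowVirtualNetworkAccess": True,
        "allowForwardedTraffic": allow_forwarded_traffic,
        "allowGatewayTransit": True,  # share this VNet's gateway with the peer
        "useRemoteGateways": False,
    }
    return databricks_side, transit_side


def validate_peering_pair(databricks_side, transit_side):
    """Check that 'use remote gateways' is matched by 'allow gateway transit'."""
    problems = []
    if databricks_side["useRemoteGateways"] and not transit_side["allowGatewayTransit"]:
        problems.append("transit peering must allow gateway transit")
    if transit_side["useRemoteGateways"] and not databricks_side["allowGatewayTransit"]:
        problems.append("databricks peering must allow gateway transit")
    return problems


dbx, transit = build_peering_pair("<databricks-vnet-id>", "<transit-vnet-id>")
print(validate_peering_pair(dbx, transit))  # []
```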

3. Creating User-Defined Routes

Custom routes need to be defined and associated with the Azure Databricks VNet subnets. These routes ensure that traffic from the cluster nodes is correctly routed back to the Databricks control plane. This involves creating a route table, enabling BGP route propagation, and adding user-defined routes for various services required by Azure Databricks.
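Why these routes matter becomes clearer with Azure's route selection rule: the route with the longest matching prefix wins. The sketch below models only that rule (it ignores the tie-breaking between user-defined, BGP and system routes), and the route table is hypothetical: documentation IP ranges stand in for the real Databricks control plane addresses, which must be taken from the Azure Databricks documentation. If the on-premises network advertises a default route, only a more specific route keeps control plane traffic off the gateway.

```python
import ipaddress


def select_route(destination, routes):
    """Pick the route with the longest matching prefix (simplified Azure selection)."""
    address = ipaddress.ip_address(destination)
    matching = [r for r in routes if address in ipaddress.ip_network(r["prefix"])]
    if not matching:
        return None
    return max(matching, key=lambda r: ipaddress.ip_network(r["prefix"]).prefixlen)


# Hypothetical route table; 203.0.113.0/24 is a placeholder for control plane IPs.
routes = [
    {"prefix": "0.0.0.0/0", "next_hop": "VirtualNetworkGateway"},   # default route learned via BGP
    {"prefix": "10.0.0.0/8", "next_hop": "VirtualNetworkGateway"},  # on-premises network
    {"prefix": "203.0.113.0/24", "next_hop": "Internet"},           # "control plane" (placeholder)
]

for dest in ("203.0.113.10", "10.1.2.3", "198.51.100.20"):
    print(dest, "->", select_route(dest, routes)["next_hop"])
```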

A detailed tutorial on how to set up the connection to on-premises resources can be found here.

Summary

In this article, we have covered the most important networking topics for Databricks, demonstrating where to find and configure these settings in the Azure Portal. We explored various aspects of Databricks networking, including the managed resource groups, public and private workspace setups, VNet injection, secure cluster connectivity, and serverless compute plane configurations.

In an enterprise context, these configurations are typically automated using Infrastructure as Code (IaC) tools like Terraform or ARM templates to avoid manual errors and streamline the deployment process. Additionally, many of the advanced networking features required to meet corporate compliance standards require the Premium plan, which costs more than the Standard plan.

By understanding these networking concepts and configurations, organisations can ensure secure, compliant, and efficient use of Databricks within their enterprise environments.